A/B Testing

A/B testing, often called split testing, is a method used in digital marketing and product development to compare two versions of a webpage, app, or product. Its goal is to identify which version performs better on chosen metrics, typically conversion rate and user engagement, so that decisions about the user experience rest on data rather than opinion. Many companies now treat A/B testing tools as a foundation of their conversion rate optimization (CRO) strategies, and many run multiple tests each month to keep refining their offerings.

In an A/B test, a control variant (A) is compared with a test variant (B), and the results are examined with statistical analysis to determine whether an observed difference is significant or merely due to chance. A/B testing increasingly incorporates artificial intelligence, which can speed up data analysis and add predictive insights. AI also lets businesses tailor tests to specific audience segments and surface preferences unique to those segments. As companies break down silos between product and marketing teams, A/B testing remains central to improving digital experiences.
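The check for whether a difference between A and B is "significant or merely due to chance" can be illustrated with a two-proportion z-test. This is a minimal sketch, not the method of any particular testing tool: the function name and the visitor and conversion counts are made up for illustration, and it assumes a fixed sample size decided before the test started.

```python
import math

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference in conversion rates.

    conv_a, conv_b: number of conversions in each variant (hypothetical inputs)
    n_a, n_b: number of visitors shown each variant
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled conversion rate under the null hypothesis "A and B convert equally".
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Illustrative numbers only: 4.0% vs 5.0% conversion on 5,000 visitors each.
z, p = two_proportion_z_test(conv_a=200, n_a=5000, conv_b=250, n_b=5000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A p-value below a threshold chosen in advance (0.05 is a common convention) suggests the difference is unlikely to be chance alone. Checking results repeatedly while a test is still running inflates false positives, so this sketch applies to a single look at the final data.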

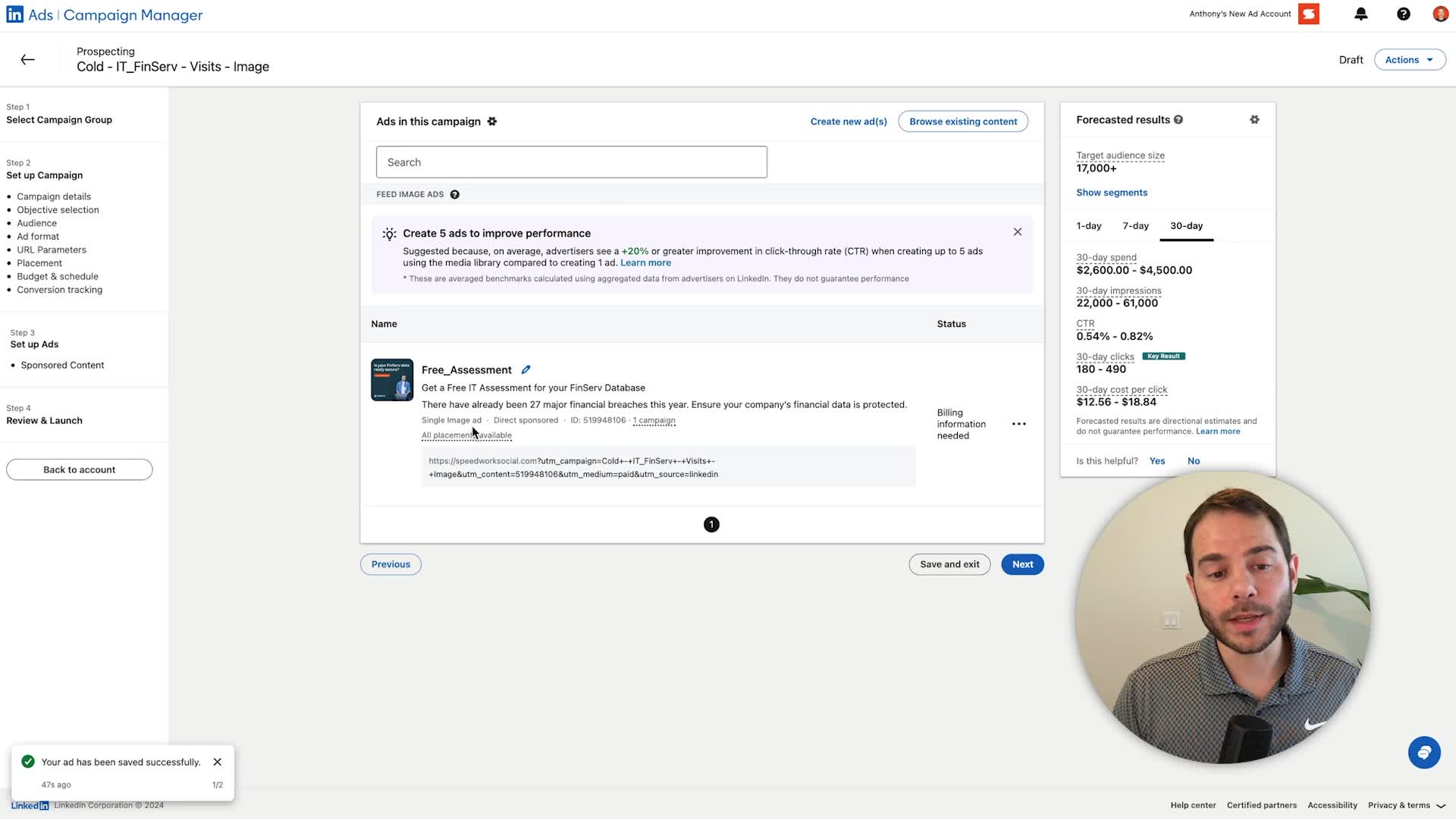

What are the key tips for creating effective LinkedIn ad campaigns?

For effective LinkedIn ad campaigns, avoid overly broad audience targeting: LinkedIn charges per click, so untargeted clicks are costly. Instead, target with specific parameters such as job titles and industries, aiming for an audience of 30,000 to 80,000 people. Turn off the audience expansion and audience network features at first to keep targeting precise and to prevent ads from appearing on out-of-context third-party websites. Use manual bidding to control costs and keep spending per click predictable. Finally, split test your ad creatives by comparing different offers, images, headlines, and copy to determine which elements perform best.

What is A/B testing?

A/B testing, also known as split testing or bucket testing, is a method of comparing two versions of a webpage or app to determine which one performs better. It is essentially an experiment in which variants are shown to users at random and statistical analysis is used to evaluate performance against conversion goals. Optimizely reports having run nearly 2 million tests across more than 9,000 brands. The process involves creating a modified version of a page (anything from a simple button change to a complete redesign), then randomly showing each visitor either the original control or the variation. User engagement is measured and analyzed to determine whether the change had a positive, negative, or neutral effect on behavior. This method lets teams make data-driven improvements to user experiences and optimize conversion rates over time.
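The random split between control and variation can be sketched as follows. This example uses deterministic hashing of a user ID, which is one common way to get a stable, roughly even split; it is an assumption for illustration and not a description of how Optimizely or any other tool assigns visitors. The function name, user IDs, and experiment name are hypothetical.

```python
import hashlib

def assign_variant(user_id: str, experiment: str, variants=("A", "B")) -> str:
    """Assign a user to a variant, always returning the same answer for the same user.

    Hashing the experiment name together with the user ID keeps assignments
    independent across experiments (hypothetical scheme for illustration).
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest, 16) % len(variants)
    return variants[bucket]

# Simulate 10,000 hypothetical visitors and count how the split comes out.
counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variant(f"user-{i}", "homepage-cta")] += 1
print(counts)
```

Because the assignment depends only on the user ID and experiment name, a returning visitor sees the same version each time, which keeps the measured behavior attributable to one variant.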

What is A/B testing and how does it work?

A/B testing is an invaluable yet simple tool that helps businesses understand customer behaviors and optimize content. It functions like a marketing experiment where you split your audience to test two different versions of the same element. By comparing performance of variations in elements like email subject lines, landing page designs, or CTA placements, you can determine which version better increases engagement, sales, or other key metrics. This data-driven approach enables businesses to make informed decisions that positively impact their goals and improve conversion rates.

What is A/B testing and how does it work?

A/B testing is an invaluable marketing experiment that helps businesses understand customer behaviors and optimize content. It works by splitting your audience to test two different versions of the same thing, such as email subject lines, landing pages, or CTAs, to determine which performs better. The goal is to gather data that lets you make informed decisions that improve business outcomes such as engagement, sales, and conversion rates. When conducting A/B tests, it is crucial to test one element at a time, ensure an adequate sample size, and verify statistical significance before implementing changes.
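What counts as an "adequate sample size" can be estimated before a test starts. The sketch below uses the standard normal-approximation formula for comparing two proportions; the baseline rate, minimum detectable effect, significance level, and power are illustrative assumptions, and the function name is hypothetical.

```python
import math
from statistics import NormalDist

def sample_size_per_variant(baseline, mde, alpha=0.05, power=0.8):
    """Approximate visitors needed per variant for a two-sided test.

    baseline: current conversion rate (e.g. 0.04 for 4%)
    mde: minimum detectable effect as an absolute lift (e.g. 0.01 for +1 point)
    alpha: significance level; power: chance of detecting a true effect of size mde
    """
    p1 = baseline
    p2 = baseline + mde
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    n = (z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2
    return math.ceil(n)

# Illustrative inputs: 4% baseline, hoping to detect a lift to 5%.
n = sample_size_per_variant(baseline=0.04, mde=0.01)
print(f"About {n} visitors per variant")
```

Smaller expected lifts require much larger samples, because the required size grows with the inverse square of the effect. That is one reason testing a single element with a small expected impact can take a long time on low-traffic pages.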

What tools can be used to optimize conversion rates and understand user interactions?

Neil Patel recommends using tools like Crazy Egg to analyze how visitors interact with your website. These analytics tools reveal where users engage, where they get stuck, and where they don't interact, providing crucial insights for conversion rate optimization (CRO). With Crazy Egg's WYSIWYG editor, marketers can run A/B tests without complex technical knowledge. Patel also emphasizes the importance of multi-touch attribution in today's digital environment, particularly for video content, which helps build brand awareness and generate long-term sales while improving search visibility on platforms like Google.

How does Crazy Egg help with conversion rate optimization?

Crazy Egg is a conversion rate optimization tool that provides insight into user behavior. It shows where website visitors engage with content, where they don't engage, and where they get stuck while browsing. This visual data helps identify pain points in the user experience that may be hindering conversions. Crazy Egg also offers a WYSIWYG (What You See Is What You Get) editor that lets marketers implement changes based on these insights. Users can run A/B tests with a few clicks and without complex technical knowledge, which makes optimization more efficient and accessible for businesses looking to improve their conversion rates.
