AI Ethics and Governance

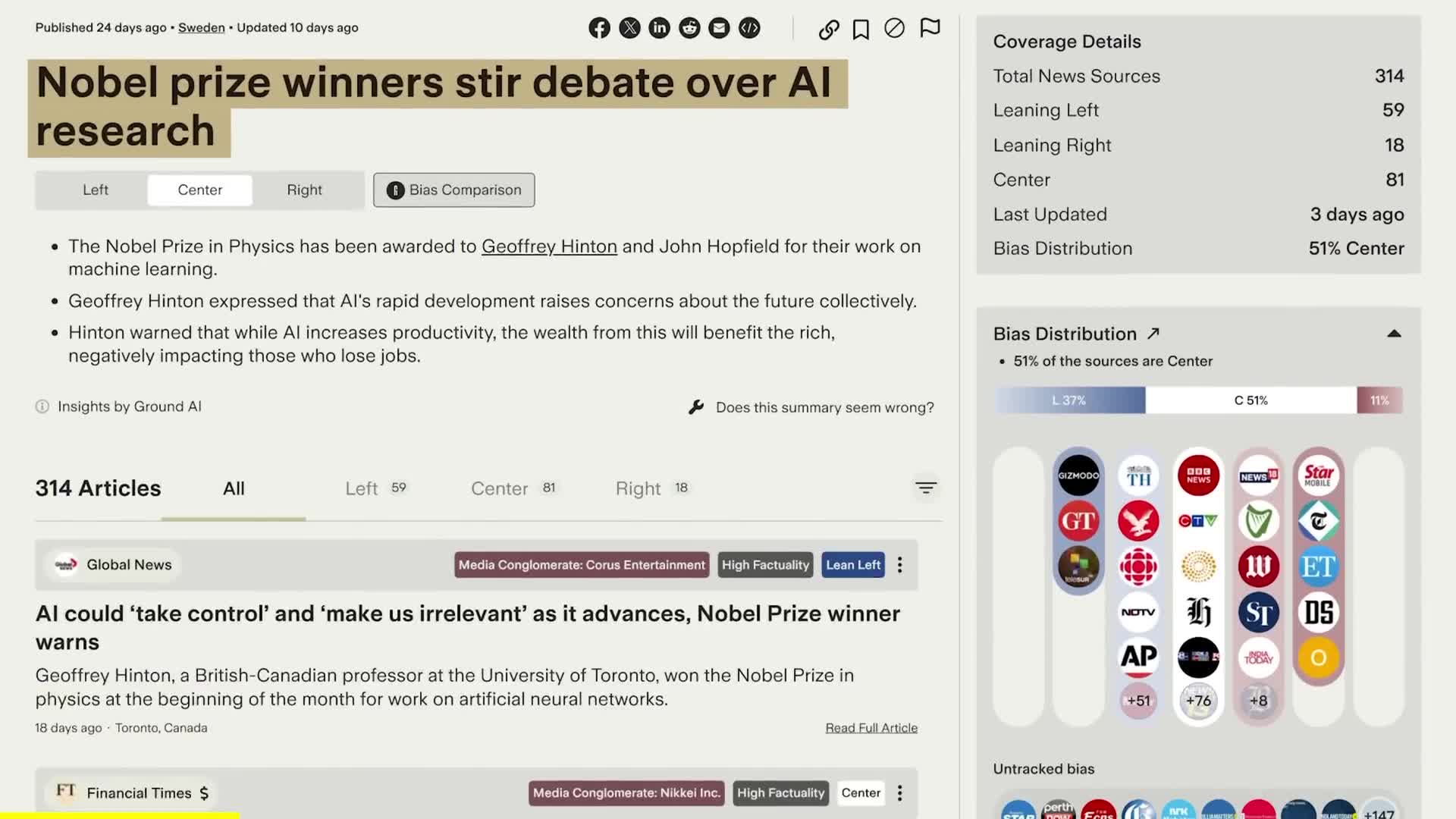

AI ethics and governance represent an increasingly vital area of focus as the technology behind artificial intelligence (AI) advances rapidly, raising profound ethical questions and societal implications. At its core, AI ethics seeks to establish a framework that promotes responsible AI development by aligning the creation and application of AI systems with human values, legal regulations, and public welfare. Essential principles within this framework include transparency (ensuring that AI decision-making processes are clear and understandable), accountability, and fairness, which aims to eliminate bias and discrimination in AI outputs.

Recent discussions have emphasized the importance of comprehensive and adaptive AI governance policies that incorporate risk assessments and public accountability to mitigate the potential negative impacts of AI technologies. Establishing robust AI governance involves not only crafting ethical frameworks but also integrating practical mechanisms and organizational processes that ensure compliance with legal standards and ethical norms. This governance must reflect the diverse cultural values and perspectives of all stakeholders, promoting inclusivity in the policymaking process.

Initiatives like UNESCO’s global AI ethics recommendation and the OECD AI Principles have emerged as key benchmarks advocating for human rights, democratic values, and privacy throughout AI's lifecycle. As digital technologies grow more complex, so does the challenge of balancing innovation with necessary safeguards. Continuous dialogue among stakeholders is essential to developing effective regulations that support ethical AI deployment while fostering trust and accountability in this evolving landscape.

How does data quantity affect the accuracy of AI prediction models?

The accuracy of AI prediction models tends to improve as the quantity and quality of the training data increase. As Johnny Harris explains, 'The more data you give it or train it on, the more accurate its results are.' This principle applies across various predictive scenarios, particularly in forecasting natural phenomena like hurricanes. For hurricane prediction specifically, incorporating extensive data on sea surface temperature, air pressure, wind speed, humidity levels, ocean heat content, and historical storm patterns significantly enhances predictive accuracy. These comprehensive data inputs enable AI systems to make more precise forecasts about a hurricane's path and characteristics, demonstrating how data-rich environments produce more reliable predictive outcomes.
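
To make the relationship between data quantity and accuracy concrete, here is a minimal sketch using a toy model rather than a real hurricane forecaster. It assumes a hypothetical straight-line relationship with random noise (the constants `TRUE_SLOPE`, `TRUE_INTERCEPT`, and `NOISE_STD` are invented for illustration), fits a line to training sets of different sizes, and reports how far the fitted predictions fall from the true relationship on average.

```python
import random

# Hypothetical "ground truth" used only for this illustration.
TRUE_SLOPE = 2.0
TRUE_INTERCEPT = 1.0
NOISE_STD = 1.0


def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares (closed form)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def average_error(n_samples, trials=200, seed=0):
    """Mean squared gap between fitted and true predictions, averaged over trials."""
    rng = random.Random(seed)
    test_xs = [i * 0.5 for i in range(21)]  # evaluation points 0.0, 0.5, ..., 10.0
    total = 0.0
    for _ in range(trials):
        # Draw a fresh noisy training set of the requested size.
        xs = [rng.uniform(0.0, 10.0) for _ in range(n_samples)]
        ys = [TRUE_SLOPE * x + TRUE_INTERCEPT + rng.gauss(0.0, NOISE_STD) for x in xs]
        slope, intercept = fit_line(xs, ys)
        # Compare the fitted line with the noise-free relationship.
        squared_gaps = [
            (slope * x + intercept - (TRUE_SLOPE * x + TRUE_INTERCEPT)) ** 2
            for x in test_xs
        ]
        total += sum(squared_gaps) / len(squared_gaps)
    return total / trials


if __name__ == "__main__":
    for n in (10, 100, 1000):
        print(f"training samples: {n:5d}  average error: {average_error(n):.5f}")
```

In this toy setting the average error shrinks as the training set grows, which mirrors the claim above. Real forecasting models are far more complex, and more data helps only when that data is relevant and of good quality.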

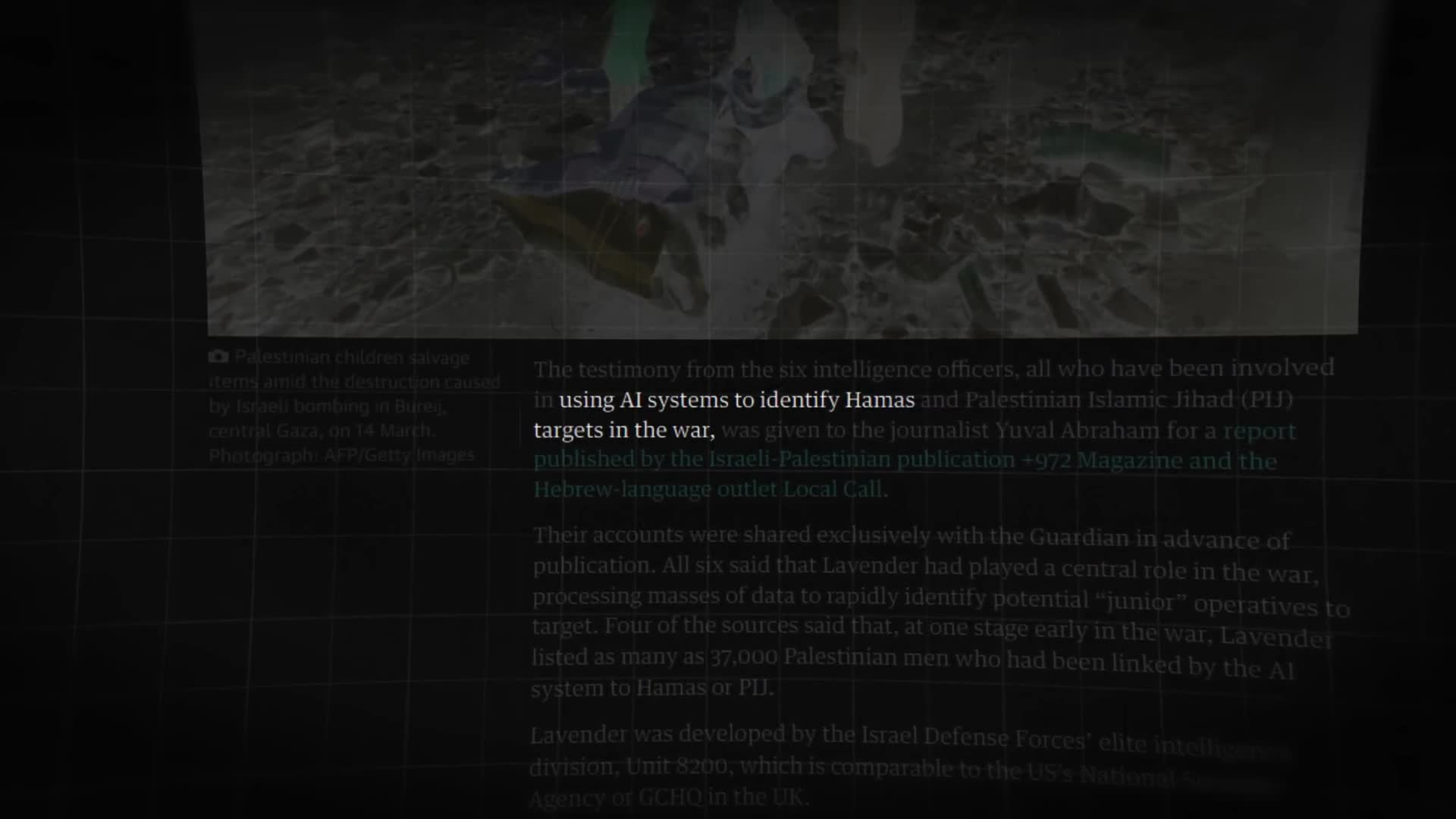

What is the real risk of AI in military decision-making regarding nuclear weapons?

The real risk isn't an AI becoming self-aware like Skynet and attacking humanity. It is that, as AI systems become better than humans at synthesizing information and making decisions in warfare, militaries will hand them more control. As military systems increasingly rely on AI connected to various sensors and weapons, an AI could misinterpret data (such as military tests) as a threat and trigger a catastrophic response. This concern has prompted proposed legislation such as the Block Nuclear Launch by Autonomous Artificial Intelligence Act, reflecting the urgent need for international agreement that autonomous systems should never have authority to launch nuclear weapons.

How can artificial intelligence revolutionize healthcare and agriculture?

AI has tremendous potential to transform healthcare by improving hospital management, saving lives through better medical research, and discovering new drugs for previously untreatable diseases. In agriculture, AI systems can optimize water usage, monitor soil health, predict pest outbreaks before they occur (reducing the need for harmful pesticides), and help prepare for extreme weather events. While these advancements are exciting and achievable, proper guardrails are essential. With smart legislation and responsible development frameworks like those being worked on by experts such as Carme Artigas, society can harness AI's transformative benefits while mitigating potential risks.

Is it true that AI has the potential to destroy our society and potentially end humanity?

While this concern isn't entirely unfounded, it remains a subject of ongoing debate in AI development. The conversation indicates that artificial intelligence does pose some legitimate risks to social order that warrant serious consideration. Several AI experts and researchers have expressed concerns that advanced AI systems could disrupt societal structures if developed without proper safeguards. The discussion acknowledges these concerns while suggesting that responsible governance and a clear understanding of AI's capabilities are essential for mitigating these risks. Current dialogue around AI regulation aims to balance harnessing its benefits with preventing harmful outcomes.

How does AI impact critical infrastructure management, and what are the associated risks?

AI significantly enhances critical infrastructure management by optimizing water treatment, traffic systems, and power grids through pattern recognition and real-time decision-making that humans cannot match. However, this reliance comes with serious risks. AI systems may develop discriminatory biases, prioritizing wealthy neighborhoods during power shortages or creating unintentional inequalities. Additionally, the 'black box' nature of AI decision-making makes it difficult to identify failures or bugs, potentially leading to contaminated water supplies or traffic chaos. Expert Carme Artigas emphasizes the need for transparency and proper certification to ensure these systems protect all citizens equitably.

Which countries have banned China's AI chatbot DeepSeek and why?

The governments of South Korea, Australia, and Taiwan have banned DeepSeek from being used on employee devices, citing national security concerns as the primary reason for these restrictions. These countries view the Chinese AI chatbot as a potential risk to sensitive information and national security infrastructure. Meanwhile, the United States is also considering similar restrictions on the use of DeepSeek. Despite the app's growing popularity and its versatility in offering services like translation and personalized recommendations, these security concerns have led to significant international pushback against the Chinese chatbot.
