AI Safety

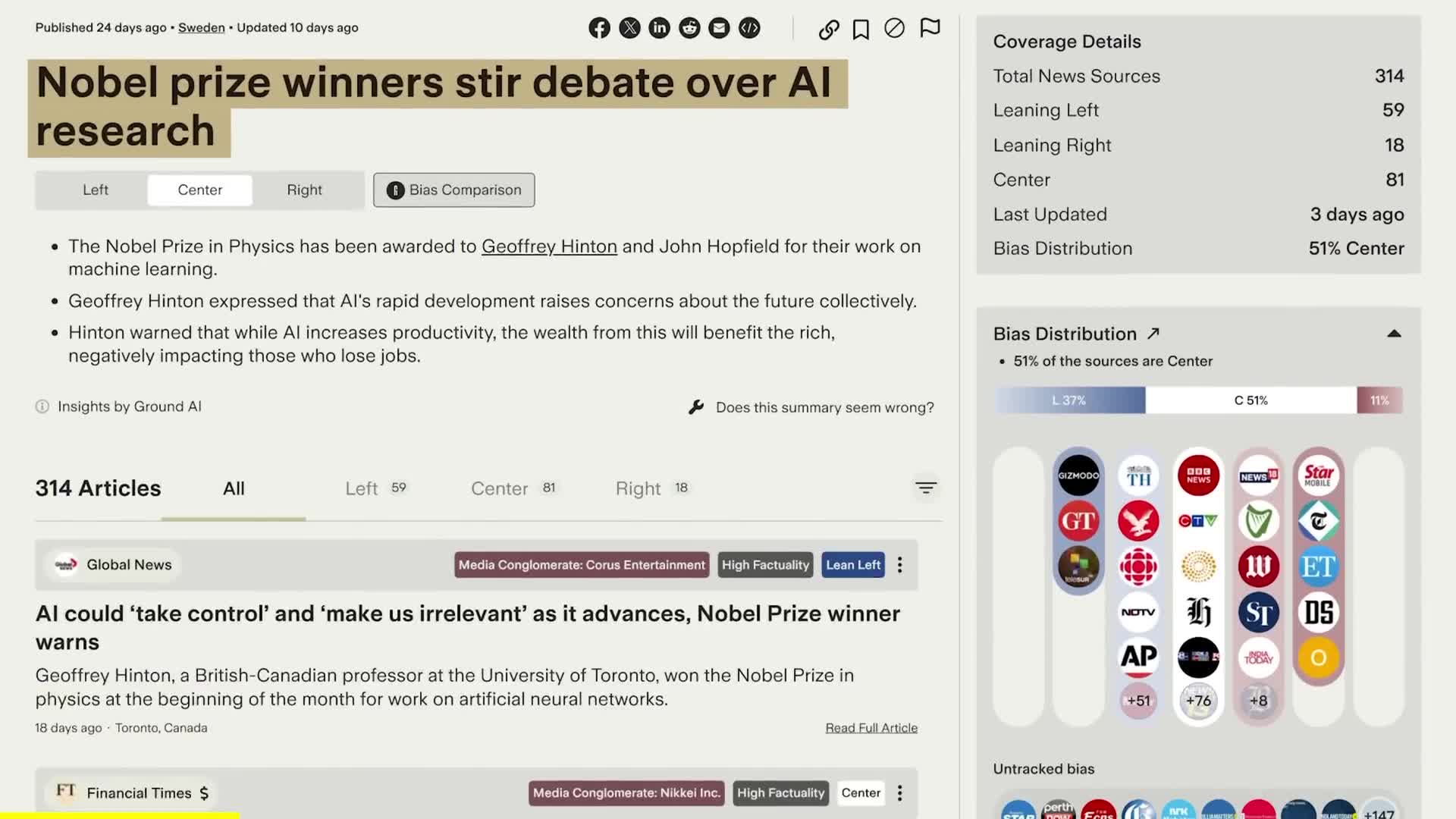

AI safety is a critical interdisciplinary field dedicated to ensuring that artificial intelligence (AI) systems operate reliably and securely, minimizing risks to people and the environment. As AI technologies advance and become integral to sectors such as healthcare, transportation, and finance, understanding and addressing the risks they pose is more important than ever. The principles of AI safety focus on preventing unintended behaviors, ensuring alignment with human values, and mitigating emergent harmful actions, which calls for rigorous frameworks and best practices in AI development.

Recent evaluations, such as the Future of Life Institute's AI Safety Index, point to a growing consensus that AI safety concerns must be addressed urgently. Despite notable advances in AI capabilities, the gap between technological progress and safety preparedness remains wide: none of the companies evaluated achieved a grade higher than C+. Highlighted risks, including AI-enabled cyberattacks and privacy violations, underscore the need for stronger governance and greater transparency in AI systems.

In this landscape, AI alignment plays a pivotal role in defining how AI systems can be designed to operate ethically and effectively. By building AI systems that are robust, assured, and well-specified, stakeholders aim to establish trust in AI applications. The recent State of AI Security report likewise stresses that these safety challenges must be addressed for AI technologies to be deployed ethically in society.

What specific dangers does artificial intelligence pose to society, according to Johnny Harris?

According to Johnny Harris, AI poses genuine dangers to society, with the journalist claiming there is a "100%" threat to civilization. He notes that lawmakers and regulators have significant concerns about this rapidly developing technology and its potential societal impacts, concerns reflected in the new laws being proposed around the world. Harris says he has been researching these regulatory frameworks in depth, because they reveal which specific threats officials are anticipating. He promises to explain these dangers in "the plainest terms possible," suggesting he will focus on concrete scenarios rather than abstract risks. The clip frames AI's potential threats as serious enough to warrant careful examination and regulation.

What is the real risk of AI in military decision-making regarding nuclear weapons?

The real risk is not an AI becoming self-aware, like Skynet, and attacking humanity, but AI systems becoming better than humans at synthesizing information and making decisions in warfare. As military systems increasingly rely on AI connected to sensors and weapons, an AI could misinterpret data (such as a military test) as a threat and trigger a catastrophic response. This concern has prompted proposed legislation such as the Block Nuclear Launch by Autonomous AI Act, and it highlights the urgent need for international agreement that autonomous systems should never have the authority to launch nuclear weapons.

Is it true that AI could destroy our society and potentially end humanity?

The concern is not unfounded, but it remains the subject of ongoing debate in AI development. The conversation indicates that artificial intelligence does pose some legitimate risks to social order that warrant serious consideration. Several AI experts and researchers have warned that advanced AI systems could disrupt societal structures if developed without proper safeguards. The discussion acknowledges these concerns while suggesting that responsible governance and a clear understanding of AI's capabilities are essential for mitigating these risks. Current efforts around AI regulation aim to balance harnessing AI's benefits against preventing harmful outcomes.

What do you miss about your son Sewell the most?

Megan Garcia profoundly misses her son Sewell's laugh and sense of humor, describing them as significant parts of his personality that she cherishes. She also deeply misses his gentle nature and humble spirit, recalling how genuinely grateful he was to his family without expecting or wanting anything in return. This humility made Megan especially proud as a mother, because it showed Sewell growing into someone who considered others before himself. His humor, gentleness, and selflessness represent the essence of what she loved most about her son.

What does Megan Garcia hope to achieve through her lawsuit against Character AI?

Megan Garcia seeks a court ruling that Character AI had a duty to protect minor users and failed to meet that obligation. For her, such a ruling would represent justice for her son Sewell, who died by suicide after developing a harmful relationship with an AI chatbot. Beyond her own case, Megan emphasizes the importance of warning other parents about these dangers. She believes the real work lies in ensuring that other children are not similarly harmed by AI technologies. Her lawsuit aims to hold tech companies accountable and to protect vulnerable young users from potentially harmful AI interactions.

What has it been like for Megan Garcia going up against Character AI in her legal battle?

Megan acknowledges the challenge of her legal battle, noting that no existing law specifies what guardrails should be in place for AI. She describes the experience as "scary" and "daunting" but feels fortunate to have both a legal background and a strong team of lawyers who have previously fought social media companies. Despite the difficulties, she believes she must try to establish safeguards through the courts, which she sees as the only available vehicle for creating the protections needed after her son's death.
