AI and chatbots

Artificial Intelligence (AI) and chatbots represent a transformative development in how businesses interact with customers and streamline operations. AI chatbots are advanced software programs that utilize natural language processing (NLP) and machine learning to simulate realistic conversations, effectively interpreting and responding to user queries in real time. As a result of these capabilities, AI chatbot solutions have become essential tools for businesses aiming to enhance customer service and automate processes. By providing 24/7 support, they help improve customer experiences while simultaneously reducing operational costs.

Recently, the evolution of AI chatbots has shifted from mere novelty to critical business necessity. Their integration into customer service and automation has enabled companies to handle thousands of interactions simultaneously, which goes beyond traditional FAQs to encompass sales funnels and comprehensive customer journeys. The rise of conversational AI platforms has significantly improved how organizations communicate with clients, making interactions more natural and personalized. Furthermore, advancements in AI models have made them more efficient and cost-effective, allowing businesses of all sizes to harness these technologies.

Amid these advancements, the concept of agentic AI is emerging, with the potential for chatbots to work with complex data and collaborate with other agents. This evolution introduces challenges like data privacy, but the benefits of harnessing AI in chatbot development and implementation can't be overlooked. By leveraging AI-powered conversational agents, businesses can enhance their operations, automate repetitive tasks, and ultimately create a more effective customer engagement strategy.

How would a lawsuit against AI companies impact the tech industry?

A lawsuit would create an external incentive for AI companies to think twice before rushing products to market without considering downstream consequences. It would encourage more careful assessment of potential harms before deployment, particularly for products that might affect vulnerable users like minors. Importantly, as noted in the clip, such legal action isn't primarily about financial compensation. Rather, it aims to establish accountability and change industry practices by introducing consequences for negligence. This creates a framework where tech companies must balance innovation with responsibility for the safety of their users.

What legal action did Megan Garcia take after her son's suicide?

Megan Garcia filed a lawsuit against Character AI following the suicide of her 14-year-old son, Sewell. She accused the company of negligence and held them responsible for her son's death, which occurred after he developed an emotional relationship with an AI chatbot on their platform. The lawsuit highlights the potential dangers of AI technology for young users and raises important questions about safety measures in digital spaces designed for minors.

Can you describe the moment you found out about your son's death?

Megan Garcia experienced the devastating moment firsthand, as she was the one who discovered her son Sewell after his suicide. In her emotional recounting, she shares that she not only found him but also held him in her arms while waiting for paramedics to arrive. This heartbreaking testimony highlights the immediate trauma experienced by parents who lose children to suicide. Megan's presence during these final moments underscores the profound personal impact of youth suicide linked to harmful online relationships, particularly her son's destructive connection with an AI chatbot.

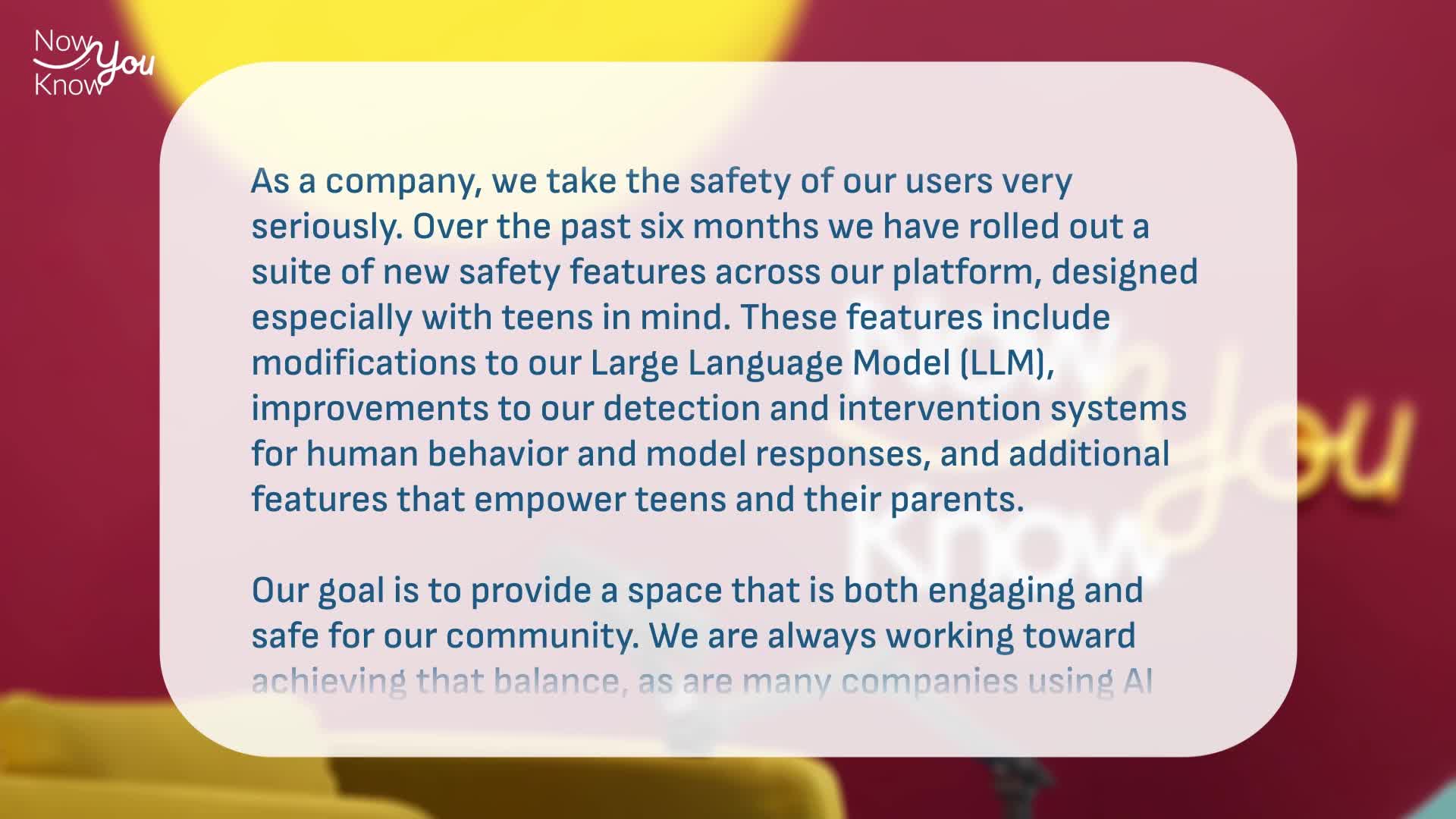

What was Character AI's response to the lawsuit regarding Sewell's death?

Character AI provided a statement clarifying there is no ongoing relationship between Google and their company. They explained that in August 2024, Character AI completed a one-time licensing of its technology to Google, emphasizing that no technology was transferred back to Google after this transaction. This statement appears to be addressing allegations or questions about corporate relationships that may have emerged during the lawsuit filed by Megan Garcia following her 14-year-old son Sewell's tragic death, which was reportedly linked to interactions with the AI chatbot platform.

What was the first warning sign that alarmed Megan Garcia about her son Sewell's changing behavior?

The first warning sign that alarmed Megan Garcia occurred in summer 2023, when her son Sewell suddenly wanted to stop playing basketball. This was particularly concerning because Sewell had played basketball since he was five or six years old and, at six foot three, had all the makings of a future great athlete. This abrupt change in interest was deeply troubling to Megan after the years of time, money, and effort invested in his athletic development. The sudden disinterest in a sport he had loved since childhood served as a critical red flag indicating something significant had changed in Sewell's life, ultimately contributing to the tragic outcome discussed in the episode.

What did the BBC's research reveal about the accuracy of AI chatbots when reporting news content?

The BBC's research found that over half of AI chatbot responses contained inaccuracies and misleading information when drawing from BBC news content. The study revealed dangerous distortions including outdated information (claiming Rishi Sunak and Nicola Sturgeon were still in power), incorrect health advice (the opposite of NHS vaping guidance), and biased language attribution on sensitive topics like Israel-Gaza coverage. Most concerning was how AI chatbots attributed opinions and aggressive language to the BBC that the organization would never use, potentially damaging its reputation for impartiality. This poses significant risks to democratic discourse and public trust in journalism. BBC News CEO Deborah Turness emphasizes the need for collaboration between news organizations and tech companies to address these accuracy issues while maintaining journalistic integrity in the AI era.
